OpenAI: Transforming the World Through Artificial Intelligence

The story behind OpenAI, the poster child of mainstream AI adoption.

Welcome to the latest edition of ‘The API Economy’ — thanks for reading.

To support The API Economy, be one of the 2,564 early, forward-thinking people, who subscribe for monthly-ish insights on the API economy, crypto, Web3, and more emerging trends.

OpenAI — Ensuring that artificial intelligence benefits all of humanity

According to research by Deloitte, 79% of global business leaders have already fully deployed three or more types of AI. Whether it’s your company or the businesses you buy from, artificial intelligence is rapidly transforming our daily lives.

That’s why OpenAI is on a mission to build AI technologies that benefit humanity as a whole. Founded in 2015 by a group of entrepreneurs and researchers, including Elon Musk and Sam Altman, OpenAI aspires to make AI “an extension of individual human wills and, in the spirit of liberty, as broadly and evenly distributed as possible.”

Through its advancements in machine learning (ML), natural language processing (NLP), and products built on top of its own technology, OpenAI is already helping to shape the future of AI and the applications that are on track to reshape our lives.

In recent years, OpenAI has been praised for creating generative models like GPT-3 and DALL·E. GPT-3 is a large language model (LLM), a type of artificial intelligence that uses deep learning algorithms to process and generate natural language.

And while you might not have heard about these models, if you’ve been on the internet in the past month, you’ve almost certainly read about ChatGPT. Powered by a model from OpenAI’s GPT-3.5 series, a refinement of GPT-3, ChatGPT is a chatbot that went viral due to its ability to engage in human-like conversations, write code, and more.

In fact, the title of this essay was generated by ChatGPT.

And the cover image was generated by DALL·E 2 (another OpenAI product):

The implications of advancements like ChatGPT are massive. From customer service to education to healthcare, no one can deny that in the coming years, AI will play an increasingly important role in our lives.

And these advancements haven’t gone unnoticed.

In January 2023, Microsoft announced a new multiyear, multibillion-dollar investment in ChatGPT-maker OpenAI, a deal rumored to inject up to $10 billion in cash into the company.

These developments are exciting, and they have the potential to bring many benefits to society. However, they also raise important ethical considerations. It will be crucial for researchers and developers in the field of AI to consider the potential risks associated with these technologies.

In the not-too-distant future, in a world run by AI, how will we distinguish human creation from digitally generated output? The creators whose work trains these models need to be compensated properly, but how? Are we at a true inflection point for AI after decades of building and experimenting?

We’ll set out to answer these questions and more in today’s deep dive into OpenAI.

Making AI cool again & again & again

And the hits just keep on coming!

In Four Seasons Total Tech, Not Boring author Packy McCormick describes the hype cycles AI has gone through & where we are today, stating:

“Winters, whether in AI, crypto, solar, or any sufficiently novel technology, are what the Gartner Hype Cycle calls the Trough of Disillusionment, that dark period after a technological trigger causes people to lose their collective minds with the possibilities of the shiny new thing.”

Which feels like a perfect description of where we are with ChatGPT.

Today, AI feels exciting. It’s just a part of life, from your phone’s facial recognition capabilities to your personalized Netflix recommendations. But did you know that less than a decade ago, Stephen Hawking warned that artificial intelligence could end mankind? Even Elon Musk said in 2017 that AI represents an existential threat to humanity.

Concerns like these are what led a group of entrepreneurs and researchers, including Musk, to found OpenAI in 2015. The goal of OpenAI is to advance the field of AI and to ensure that it is developed in a way that is safe and beneficial for humanity.

Prominent Silicon Valley players like Peter Thiel and Sam Altman collectively pledged $1 billion to OpenAI in 2015. The organization has had a few ups and downs over the years, including the departure of Elon Musk from its board of directors and an investigation by MIT Technology Review.

Even so, since 2015, OpenAI has made significant contributions to the world of artificial intelligence, especially the field of large language models.

Most recently, OpenAI developed GPT-3 (Generative Pretrained Transformer 3), a state-of-the-art language model that has been widely praised for its ability to generate human-like text. They also created DALL·E, a model designed to generate images from text descriptions. Language models like GPT-3 have been used in many applications, including chatbots and machine translation.

Shaking up the world with ChatGPT

Launched on November 30, 2022, ChatGPT managed to garner 1 million users within just five days. And the platform's success wasn’t short-lived — today, over a month later, our social media feeds remain flooded with information about ChatGPT’s many groundbreaking use cases.

ChatGPT — 5 Days to get to one million users.

So what is ChatGPT, and what’s the big deal?

ChatGPT is a chatbot developed by OpenAI that uses a language model from the GPT-3.5 series, itself built on GPT-3, to engage in human-like conversations. It’s designed to understand and respond to a wide range of topics and inputs, making it a powerful tool for natural language processing and conversational AI.

Why does that matter? Well, with its ability to generate human-like text and respond to complex queries, ChatGPT has the potential to revolutionize the way we interact with machines and automate tasks that require language understanding.

After the launch of ChatGPT, Ben Thompson wrote in his essay ‘AI Homework’,

“What has been fascinating to watch over the weekend [of the launch] is how those refinements have led to an explosion of interest in OpenAI’s capabilities and a burgeoning awareness of AI’s impending impact on society, despite the fact that the underlying model is the two-year-old GPT-3. The critical factor is, I suspect, that ChatGPT is easy to use, and it’s free: it is one thing to read examples of AI output like we saw when GPT-3 was first released; it’s another to generate those outputs yourself; indeed, there was a similar explosion of interest and awareness when Midjourney made AI-generated art easy and free (and that interest has taken another leap this week with an update to Lensa AI to include Stable Diffusion-driven magic avatars).”

Who can forget DALL·E & DALL·E 2?

The original DALL·E model was announced by OpenAI in January 2021 and was named after the artist Salvador Dalí and the Pixar character WALL·E. It was a generative model that combined natural language processing with image generation, allowing it to create images from text descriptions. Like ChatGPT, DALL·E builds on GPT-3.

In fact, originally, DALL·E was created as a 12-billion parameter version of GPT-3. It was trained to generate images from text descriptions, using a dataset of text–image pairs.

DALL·E was built with a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.

While the original DALL·E model was able to generate images from text descriptions, it had some limitations. This led to the announcement of DALL·E 2 in April 2022. DALL·E 2 generates more realistic images at a higher resolution than the original, and it can also edit existing images and create variations of them.

All of this makes DALL·E 2 a more versatile and powerful AI system than the original DALL·E model. According to OpenAI, “DALL·E 2 is preferred over DALL·E 1 for its caption matching and photorealism when evaluators were asked to compare 1,000 image generations from each model.”

DALL·E 2 hasn’t become as mainstream as ChatGPT for many reasons, including the fact that the tool’s outputs can be biased and even racist. Khari Johnson of Wired wrote:

“By OpenAI’s own admission, DALL-E 2 is more racist and sexist than a similar, smaller model. The company’s own risks and limitations document gives examples of words like “assistant” and “flight attendant” generating images of women and words like “CEO” and “builder” almost exclusively generating images of white men.”

OpenAI is on a mission to make sure AI is beneficial for all humanity, but DALL·E 2 certainly still has some room for improvement.

The key to AI: language models

Large language models (LLMs) are a type of artificial intelligence trained to understand and generate human language. They can be used to perform a variety of tasks such as language translation, text summarization, text generation, and more. They learn how to understand and generate language from a lot of data, typically in the form of text.

Basically, LLMs can produce a word sequence based on the probability of certain words following each other, in a way that resembles the way people write. Think of it as a robot that can understand and talk like a human.
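To make the "probability of certain words following each other" idea concrete, here is a minimal sketch in Python. It is a toy word-pair counter, not how GPT-3 works internally (real LLMs use neural networks trained on vastly more text), and the function names and the tiny corpus are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn raw follower counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, length, seed=0):
    """Sample up to `length` words, each chosen by follower probability."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(length - 1):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:  # the last word never had a follower in the corpus
            break
        words, weights = zip(*probs.items())
        output.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(output)


# Hypothetical toy corpus; a real model trains on billions of words.
corpus = "the cat sat on the mat and the cat ate the fish"
counts = train_bigram_counts(corpus)
print(next_word_probabilities(counts, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(generate(counts, "the", 5))
```

In this corpus, "the" is followed by "cat" twice and by "mat" and "fish" once each, so the sketch picks "cat" half the time. Scaling that idea up, with far more context than one previous word, is roughly what makes LLM output read like human writing.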

These models have become increasingly popular in recent years for their ability to perform a wide range of language-related tasks, such as translation, summarization, and text generation.

Large language models are trained on massive amounts of text data and are extremely adept at generating human-sounding text. As a result, LLMs are already being used across industries and will continue to transform the world as we know it.

Here are just a few things LLMs can be (and have been) used for:

Writing blog posts

Crafting poetry

Creating memes

Writing recipes

Creating comic strips

Generating code snippets

Translating content

Helping us write this lengthy essay!

Ultimately, large language models are a key part of modern AI research and, before we know it, will change the way we go about our daily lives.

How LLMs are eating the world

The roots of today’s large language models go all the way back to 1966. That year, Joseph Weizenbaum of MIT developed ELIZA, a program that mimicked human conversation. ELIZA used simple pattern-matching rules to react to user input naturally and conversationally. In fact, it was one of the first programs to utilize natural language processing (NLP).
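For a feel of what "simple rules" means here, the sketch below shows an ELIZA-style responder in Python. The three rules and the fallback reply are hypothetical examples written for this essay, not Weizenbaum's original script, which was considerably richer.

```python
import re

# Each rule pairs a pattern with a reply template. These rules are
# illustrative assumptions, not ELIZA's actual script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(message):
    """Return the reply for the first rule that matches the message."""
    cleaned = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Echo part of the user's own words back as a question.
            return template.format(*match.groups())
    return DEFAULT_REPLY


print(respond("I need a vacation."))  # Why do you need a vacation?
print(respond("Hello there"))         # Please go on.
```

Nothing here understands language. The program only reflects the user's words back, which is why the gap between ELIZA and a model like the one behind ChatGPT is so large.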

We’ve come a long way since ELIZA. Today, ChatGPT (and its underlying language model) can do so much more than Weizenbaum could’ve imagined. Let’s take a closer look at the endless ways LLMs and AI are being applied today, across every aspect of our lives.

AI Homework

The first obvious casualty of large language models is homework. From parents looking for help with their children’s homework to college students struggling with a last-minute deadline, ChatGPT might seem like the perfect solution. In fact, it’s already written some A+ college essays.

The real training for everyone, however, and the best way to leverage AI, will be in verifying and editing information. At the end of the day, ChatGPT is just a tool, and it can get confused just as easily as a human (does that, in some ways, make it MORE human? 🤔).

Ben Thompson of Stratechery shared a great example of this after testing ChatGPT on his daughter’s homework. ChatGPT responded to the prompt “Did Thomas Hobbes believe in the separation of powers” very confidently, but completely incorrectly. The platform confused Hobbes’s views with those of his contemporary John Locke.

The two philosophers are often quoted together and discussed across the same texts, so in some ways, this doesn’t come as a surprise. What this means is that while ChatGPT might be a helpful “assistant” for students, they’ll still need to fact-check their work and potentially rewrite some of the chatbot’s less logical arguments.

Robinhood Snacks talked about AI homework in a recent piece: “AI gets (scary) creative… Generative AI, which can convert text prompts into images and articles, made a splash last year. OpenAI released its futuristic chatbot, ChatGPT, to the public last month, shortly after launching its image generator, DALL-E. The impressive tools caused shock and awe — and raised fears they could replace creative professions like copywriting and design (Jasper, a genAI tool used for marketing copy, hit a $1.5B valuation). Some teachers are convinced genAI will kill homework too. While unknowns abound, investment is booming as companies seek innovative ways to automate work.”

AI Media

As artificial intelligence becomes more advanced, it may be bringing us into a new era of “endless media.” Mario Gabriele of The Generalist recently wrote that AI soon “may match or surpass human abilities across mediums, leading to a world in which creating a film, comic, or novel can be done on demand, ad infinitum.”

In reality, that future is probably closer than we think. As just one example, the AI content platform Jasper.ai has skyrocketed in popularity recently, garnering over 50,000 users.

Built on OpenAI’s GPT-3 (the same model family behind ChatGPT), Jasper is designed to write original, creative content. Entrepreneurs, marketers, and writers typically use the platform as an assistant for brainstorming as well as writing blog posts, social media posts, Facebook advertisements, and more.

Meanwhile, in the field of journalism, some companies have gone so far as to develop AI systems that can write news articles with a similar style and quality to those written by human journalists. However, the quality may vary, and often, it's hard for AI systems to deal with complexity and abstract concepts, which are a crucial part of journalism.

AI is a powerful tool that has the potential to improve the efficiency and accuracy of many tasks in media, from information gathering and analysis to content creation and distribution. However, it also raises important ethical and social questions, such as the need for transparency and accountability in the use of AI in journalism.

AI Programming

Artificial intelligence also has the potential to improve the efficiency and accuracy of mundane tasks in programming, from code generation to debugging and testing.

First, let’s discuss debugging. AI-based systems can be used to automatically detect and fix errors in code, such as security vulnerabilities. This can help to improve the quality and reliability of software.

By automating the debugging process, AI can save developers time and reduce the number of bugs in software. However, AI-based debugging systems are not perfect and can still miss certain types of errors or even introduce new ones.

Just as with homework, it will be important for developers to combine AI-based debugging with manual debugging and code review. They’ll still save hours of manual labor and improve efficiencies in the long run.

AI can also be, and has been, used in programming for:

Code generation: Using a set of input examples and a defined set of rules, AI can help automate repetitive tasks and make the programming process incredibly efficient.

Refactoring: AI-based systems can be used to automatically optimize and reorganize code, making it more readable and maintainable.

Completion: AI-based systems can be used to suggest code completions for programmers, based on their current context (like how your email helps complete your sentences).

Test Generation: AI can be used to generate tests that cover a large set of scenarios and inputs, which helps verify that the software is working as expected.

By automating some mundane tasks and massively improving efficiency, AI is bound to have a huge impact on this field.

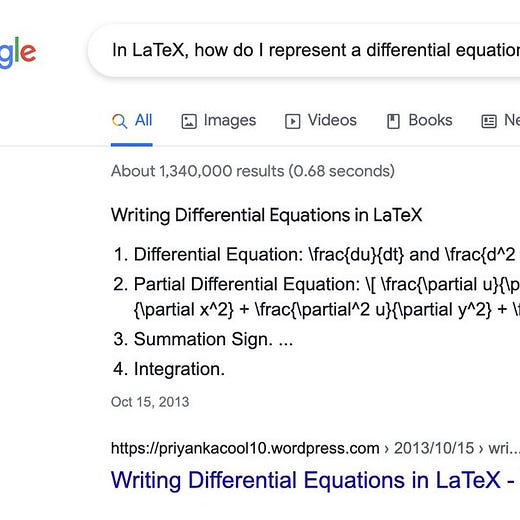

AI Search Engine

Search engines like Google, Bing, and Yahoo already use artificial intelligence (AI) in a number of ways to improve search results and make those results more relevant for users. As just one example, Microsoft, which owns Bing, is an investor in OpenAI and is hoping to greatly improve the search engine with the help of AI.

Recently, Microsoft announced its extended partnership with OpenAI, and other companies like IBM are quickly following suit.

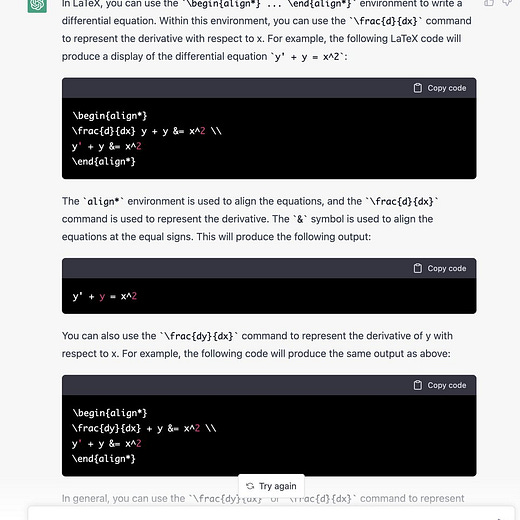

But the question that some people have been asking is, will ChatGPT and other AI replace search engines? ChatGPT doesn’t seem to think so.

The chatbot makes a good point. Search engines and chatbots built on language models were designed for two very different purposes. Not to mention, unlike Google, ChatGPT won’t be free forever. OpenAI launched it to the public for free last year as a way to gather feedback, but it has already opened a waitlist for a paid, Pro version.

Besides accessibility, GPT-3 and other language models are simply limited in what questions they can answer. They can only provide information based on the data they’re trained on, which isn’t always up to date. ChatGPT’s training data, for example, only goes up to 2021.

In contrast, search engines like Google use a combination of advanced algorithms and machine learning to crawl the web and index billions of pages, which allows them to provide a wide range of information on any topic.

Ultimately, search engines and AI will work together to give us the most advanced, accurate access to information possible. So far, the use of AI in search engines has made them much more sophisticated and able to provide users with more relevant and personalized results, improving the user experience.

AI Translation

Artificial intelligence also has a few fascinating applications for translations between languages. These advancements in AI-assisted translation are incredibly exciting because they have the potential to make translation more accurate, efficient, and accessible.

For instance, using neural machine translation (NMT) technology can result in translations that sound more natural and are easier to understand. This can be especially beneficial for businesses looking to expand into new markets, as it can help to improve communication and build stronger relationships with customers.

When you see the difference between the outputs of ChatGPT and Google Translate, it’s clear that this technology has the potential to make a massive impact on how we communicate cross-culturally and around the world.

Additionally, AI-powered tools can be used to pre-process text, making the translation process faster and far more efficient. These tools can also post-edit translations, which can help to improve the overall quality of the final product.

Developments in AI-assisted translation can open up new opportunities for businesses and individuals to connect with people from different cultures and backgrounds. Not to mention, it can help us all to better understand and be understood in different languages. It's a really exciting time for the field of AI and translation.

AI Idea Generation

Some career paths require us to constantly share new, fresh content, whether you’re the marketing director of a crypto company, a LinkedIn thought leader, or an Instagram influencer. So how do you keep up with it all?

Artificial intelligence can help. Besides directly asking a chatbot to come up with ideas for you, Ethan Mollick of Fast Company writes, “Chatting with an AI about ideas lets you explore angles, approaches, and—most importantly—weirdness that you might never have thought of on your own.”

Try as you might, it can be challenging to constantly come up with a fresh new perspective on a topic you’re super familiar with. AI has the potential to not just be an idea machine, but to actually have human conversations with us about how we approach things.

In fact, one of the coolest things about ChatGPT, specifically, is how you can make it “act the part.” If you’re conducting market research, inventing a new product, or simply curious about a new perspective, AI can help you out. It (probably!) won’t ever replace the effectiveness of a real human interview, but it’s a great start.

AI Lawyers

The potential applications of AI are limitless

AI technology is advancing at an incredible pace and its potential future applications are truly limitless. Healthcare professionals can use AI to analyze medical images and make diagnoses more quickly and accurately. Financial institutions can use AI to detect and prevent fraud. App developers can use AI in transportation to optimize routes and improve efficiency.

In addition, AI can be used to automate repetitive tasks, allowing people to concentrate on more creative and fulfilling tasks. It can improve the customer experience by providing more accurate predictions and personalized recommendations. And it can help us discover data patterns that cannot be detected by humans alone.

With all the advancements and accessibility of AI, new AI companies are launching and getting funded on what seems like a daily basis.

The possibilities are truly endless, and as the technology continues to improve, we will see even more innovative and groundbreaking applications of AI.

Navigating the usage of IP in AI model training

In recent years, artists have had some… concerns about AI-generated art. The data AI models use in the learning process comes from many sources, including books, music, social media, films, etc. With this data, the model ultimately develops an understanding of that specific type of IP and later can generate new variations of the same IP.

You can see why creators aren’t happy about that. When an AI model uses IP, this can infringe on the rights of the creators who may not have given permission for their work to be used in this way. Additionally, using someone else's IP to train an AI model can make it difficult to determine who is responsible for the resulting model and who should be compensated for its creation.

This has been a large and public issue for many “AI art” platforms. In fact, just days ago, a leading class action law firm (Joseph Saveri Law Firm, LLP) filed a lawsuit against several different AI art platforms, including Stability AI, DeviantArt, and Midjourney. The lawsuit was raised on behalf of plaintiffs seeking compensation for damages caused by these companies and an injunction to prevent future harms.

These AI art tools are built on the deep learning, text-to-image model called Stable Diffusion, a competitor to OpenAI’s DALL·E. Stable Diffusion is trained on pairs of images and captions taken from LAION-5B — including billions of allegedly copyrighted images.

Companies are slowly beginning to address artist backlash. For one, DeviantArt announced last year that it would allow artists to opt out of AI art generations. Their CEO shared the following with TechCrunch regarding the feature:

“AI technology for creation is a powerful force we can’t ignore. . . . It would be impossible for DeviantArt to try to block or censor this art technology. We see so many instances where AI tools help artists’ creativity, allowing them to express themselves in ways they could not in the past. That said, we believe we have a responsibility to all creators. To support AI art, we must also implement fair tools and add protections in this domain.”

OpenAI has also made moves to respond to artist concerns by licensing some of the images in DALL-E 2’s training data set. However, the license was limited, so the issue of IP remains in question.

There are many legal and ethical considerations when it comes to AI and intellectual property. However, AI is a new and rapidly advancing technology, which means there’s little precedent for how to handle these issues.

Could NFTs be the solution for compensating creators?

As the world slowly moves towards a Web3 world (where blockchain technology will rule the decentralized internet), non-fungible tokens, or NFTs, could be the solution to AI’s IP problem.

NFTs are stored on the blockchain in a decentralized ledger, which makes them extremely difficult to counterfeit. Because they’ve brought art ownership to the “masses,” NFTs have skyrocketed in popularity over the past couple of years. At the end of 2022, the market capitalization of NFTs stood at $21 billion, up from only $91 million in early 2021.

So how do NFTs relate to this question of IP and AI?

Well, for starters, NFTs offer a secure and verifiable way to track ownership of the IP. Instead of getting lost in the abyss, creators can assign unique, digital tokens to their IP, which can then be used to prove that they are the rightful owners of the content.

If you’re a creator, that means you can keep track of your content on the internet, protect your rights, and make sure you get compensated if your content is used by AI. And if your art, music, or writing is used without permission, you’ll actually have some legal ground to stand on.

That’s because the Digital Millennium Copyright Act (passed in 1998) allows copyright holders to send “take-down” notices to online service providers. When someone is accused of violating another person’s intellectual property rights, these notices provide an enforcement mechanism.

A crucial step to that enforcement, however, is proving ownership of the IP. That’s where NFTs come in since NFT ownership is secure and verifiable.

All this said, AI systems and entities using AI systems still have an ethical responsibility to not use IP without permission. Even if the victim can prove ownership, receiving compensation and credit for their work can be a lengthy process requiring the help of a lawyer.

The good news is that NFTs can actually promote the ethical use of AI by making it easier for organizations and individuals to identify and obtain permission to use IP in their training data. If an artist creates an NFT, it’s easy to trace ownership back, request permission, and provide compensation.

With billions of pieces of intellectual property out there, though, we have a long way to go before NFTs can completely solve this problem. The use of NFTs can definitely help create a more transparent and equitable system for training AI models with IP, but both the artists themselves and the companies developing AI have a responsibility to protect creators’ rights.

The ethical considerations of large language models

We’ve highlighted a few ethical challenges that come with artificial intelligence, many of which scare users away from the tools altogether. However, just as with any technology, ethical concerns can be addressed in a way that will make these tools beneficial to all humanity (or at least, that’s OpenAI’s mission).

One of the challenges of using large language models like GPT-3 for chatbots is ensuring that the generated text is appropriate and accurate, which really comes down to the data these bots learn from. For example, if a chatbot is trained on biased or offensive data, there’s a chance it will generate responses that are biased or offensive as well.

One example of bias in AI is when a system is trained on a dataset that does not accurately represent the population it will be used on. This can lead to the AI system making decisions that are unfair or discriminatory towards certain groups of people. For example, if a facial recognition system is trained on a dataset that mostly includes images of light-skinned people, it may have difficulty accurately recognizing darker-skinned individuals.
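One rough way to catch this kind of problem early is to check how each group is represented before training. The Python sketch below is only an illustration: the group labels, the 90/10 split, and the 20% threshold are made-up assumptions, and a real audit would also compare the model's accuracy for each group.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share=0.2):
    """List groups whose share falls below `min_share` (an arbitrary cutoff)."""
    shares = group_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical skin-tone labels for a face dataset: 9 lighter, 1 darker.
training_labels = ["lighter"] * 9 + ["darker"] * 1

print(group_shares(training_labels))      # {'lighter': 0.9, 'darker': 0.1}
print(underrepresented(training_labels))  # ['darker']
```

A check like this won't fix bias on its own, but it flags a skewed dataset before a model learns from it.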

In another instance, Amazon actually stopped using AI to support its hiring process due to the fact that it exhibited sexism and other biases. Here’s how Reuters described Amazon’s AI mishap:

“Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period. Most came from men, a reflection of male dominance across the tech industry. In effect, Amazon’s system taught itself that male candidates were preferable. It penalized resumes that included the word “women’s,” as in “women’s chess club captain.” And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter. They did not specify the names of the schools.”

Training AI on more diverse data is certainly one solution to bias, but humans will likely always have to be involved at some point in the AI’s process in order to truly prevent discrimination. The most important thing to remember here is that AI is meant to be used as a tool, not a panacea.

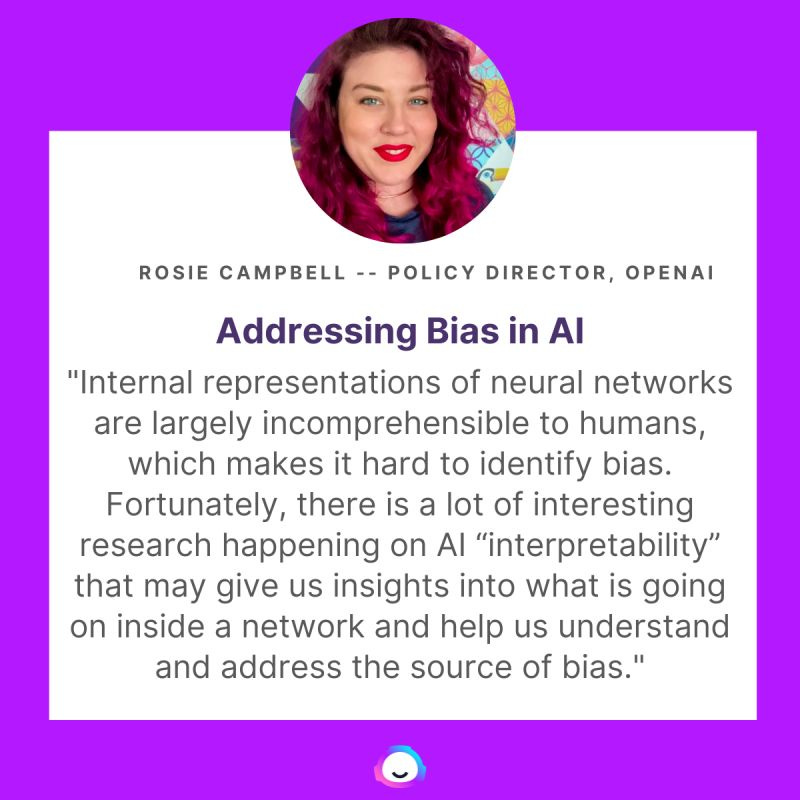

Rosie Campbell, who works on Trust & Safety at OpenAI, discussed bias in AI with Jasper, stating:

Another challenge for AI is the potential for misuse. For example, someone could use a chatbot powered by a large language model to impersonate another person or to spread false or malicious information. Like any tool, AI can also be utilized by criminals for a wide range of offenses, especially cybercrime. All of this could have serious consequences, demonstrating the need for careful oversight and regulation of AI technologies.

Overall, while large language models have many exciting potential uses, they definitely come with important ethical considerations. As this field advances, it will only become more crucial for organizations, including OpenAI, to develop strategies to mitigate these risks and ensure that AI is used in a responsible and ethical manner.

Large language models are transforming society, and they’re just getting started

In conclusion, OpenAI is truly pushing the boundaries of what is possible with artificial intelligence. They are continuously shaking up the world with their advancements in language models, especially with the recent launch of ChatGPT.

The future potential of large language models is exciting, and their impact on society is sure to be vast. Regardless of the ethical challenges and controversies, OpenAI is holding firm to its mission to advance the field of AI and ensure it can benefit all humanity.

While the recent breakthroughs in AI feel new & exciting, it feels like we’ll experience a few AI hype cycles before the technology really feels larger than life. There’s no denying that ChatGPT is already changing the way we work, but the real innovation will come when the compounding innovation reaches an exponential breakthrough that changes our lives forever & ever.

Thanks for reading — until our next adventure.

Special thanks to my friend Haley Davidson for copy help & edits and mama Schroeder for additional edits (any typos are on them 😊).

Disclaimer: This month’s edition of The API Economy has no direct affiliation with Circle or Plaid. I am employed by Circle at the time of this writing. But, the views in this essay are my own personal opinions and don’t necessarily represent the views of Circle or OpenAI.